Understanding Big Data

Defining Big Data

While “big data” lacks a rigid definition, it generally refers to datasets whose size, complexity, and velocity exceed the processing capabilities of traditional data management tools. These datasets often exhibit the following characteristics:

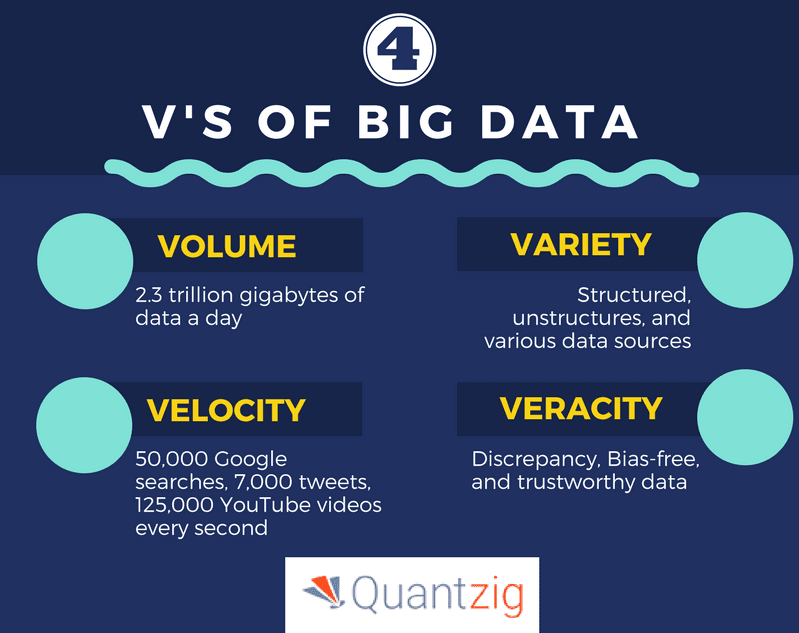

The Four V’s of Big Data

Big data is commonly characterized by four traits, known as the four V’s:

- Volume: The sheer size of big data necessitates specialized storage and processing solutions. We’re talking petabytes or exabytes of data, generated from sources as diverse as scientific instruments, social media, and IoT sensors.

- Variety: Big data encompasses structured, semi-structured, and unstructured data. Text, images, audio, video, and sensor readings all contribute to the heterogeneity of big data, posing challenges for standardized analysis.

- Velocity: Data arrives at an unprecedented rate, often in real-time. Processing this data on the fly is essential for applications like fraud detection, algorithmic trading, and real-time analytics.

- Veracity: The accuracy, reliability, and trustworthiness of big data can vary. Data cleansing and validation are crucial steps in ensuring the integrity of insights derived from big data.
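To make the veracity point concrete, here is a minimal Python sketch of a cleansing step. It assumes a hypothetical stream of temperature readings (the `SensorReading` type and the plausibility bounds are illustrative, not from any particular system) and drops records that are incomplete, physically implausible, or exact duplicates before they reach analysis.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass(frozen=True)  # frozen makes instances hashable, so we can dedupe with a set
class SensorReading:
    sensor_id: str
    timestamp: int            # Unix epoch seconds
    celsius: Optional[float]  # None means the value was missing


# Hypothetical plausibility bounds for an outdoor temperature sensor.
MIN_CELSIUS = -50.0
MAX_CELSIUS = 60.0


def is_valid(r: SensorReading) -> bool:
    """Reject records that are incomplete or physically implausible."""
    if not r.sensor_id or r.timestamp <= 0:
        return False
    if r.celsius is None:
        return False
    # NaN fails both comparisons, so it is rejected here as well.
    return MIN_CELSIUS <= r.celsius <= MAX_CELSIUS


def cleanse(readings: Iterable[SensorReading]) -> List[SensorReading]:
    """Drop invalid records and exact duplicates, preserving arrival order."""
    seen = set()
    clean = []
    for r in readings:
        if not is_valid(r) or r in seen:
            continue
        seen.add(r)
        clean.append(r)
    return clean


raw = [
    SensorReading("s1", 1700000000, 21.5),
    SensorReading("s1", 1700000000, 21.5),   # exact duplicate
    SensorReading("s2", 1700000060, None),   # missing value
    SensorReading("s3", 1700000120, 999.0),  # implausible value
    SensorReading("", 1700000180, 20.0),     # missing sensor id
]
clean = cleanse(raw)
print(len(clean))  # 1
```

Real pipelines typically layer further checks on top of rules like these (schema validation, cross-source reconciliation, outlier detection), but the principle is the same: filter or flag untrustworthy records before drawing conclusions from them.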

Technical Challenges in the Big Data Landscape

Big data comes with its fair share of technical hurdles:

- Storage: Distributed file systems like the Hadoop Distributed File System (HDFS) and cloud-based object storage services have emerged to address the storage demands of big data by spreading data across many machines.

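- Processing: Frameworks like Apache Spark and Hadoop MapReduce distribute computation across clusters of machines, while NoSQL databases offer horizontally scalable storage and querying; together they provide the computational muscle needed to analyze big data efficiently.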

- Data Integration: Combining data from disparate sources into a unified view remains a challenge, because sources often differ in schema, format, naming conventions, and quality.

- Real-Time Processing: Stream-processing engines like Apache Flink, often fed by event-streaming platforms like Apache Kafka, enable real-time processing of big data streams.
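The MapReduce model mentioned above splits a job into a map phase, a shuffle that groups intermediate results by key, and a reduce phase. The following is a minimal single-process Python sketch of the classic word-count example; it only imitates the data flow, and the function names are illustrative rather than part of Hadoop’s or Spark’s API.

```python
from collections import defaultdict
from itertools import chain
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(document: str) -> Iterator[Tuple[str, int]]:
    """Emit a (word, 1) pair for every word in one input split."""
    for word in document.lower().split():
        yield word, 1


def shuffle_phase(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Group intermediate values by key, as the framework does between phases."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Combine all values for one key into a single result."""
    return key, sum(values)


def word_count(documents: Iterable[str]) -> Dict[str, int]:
    # On a real cluster each split would be mapped on a different node and the
    # shuffle would move data over the network; here everything runs in order
    # inside one process.
    intermediate = chain.from_iterable(map_phase(doc) for doc in documents)
    grouped = shuffle_phase(intermediate)
    return dict(reduce_phase(k, v) for k, v in grouped.items())


counts = word_count(["big data is big", "data is everywhere"])
print(counts)  # {'big': 2, 'data': 2, 'is': 2, 'everywhere': 1}
```

Because each map call sees only its own split and each reduce call sees only one key, both phases can run in parallel; that independence is what lets frameworks in this family scale across many machines.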

Ethical and Societal Considerations

As we collect and analyze ever-increasing amounts of data, ethical considerations become paramount:

- Privacy: Protecting sensitive personal information is essential. Anonymization and encryption techniques are used to safeguard privacy.

- Bias: Big data analytics can perpetuate or amplify biases present in the data. It’s crucial to audit the data for skew and to evaluate algorithms and models for fairness across the groups they affect.

- Transparency: Users should understand how their data is being used and the potential implications.
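One common privacy technique is pseudonymization: replacing a direct identifier with a token that stays consistent across records but cannot easily be traced back. The sketch below uses a keyed hash (HMAC-SHA256 from Python’s standard library) on a hypothetical purchase log. The key handling is an assumption for illustration only. Note that pseudonymized data is not fully anonymous: the remaining fields may still allow re-identification, and anyone holding the key can test guessed identifiers.

```python
import hashlib
import hmac
import secrets

# Hypothetical secret key generated per run for this example; in practice it
# would come from a key-management system and be stored apart from the data.
SECRET_KEY = secrets.token_bytes(32)


def pseudonymize(identifier: str, key: bytes = SECRET_KEY) -> str:
    """Replace an identifier with a hex-encoded HMAC-SHA256 digest.

    The same input and key always give the same token, so records can still
    be joined on it. Without the key, an attacker cannot link tokens back to
    identifiers by hashing guessed values.
    """
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


records = [
    {"email": "alice@example.com", "purchase": 42.0},
    {"email": "bob@example.com", "purchase": 17.5},
    {"email": "alice@example.com", "purchase": 8.0},
]

# Keep the analytic fields, swap the direct identifier for its token.
safe = [{"user": pseudonymize(r["email"]), "purchase": r["purchase"]} for r in records]

for row in safe:
    print(row["user"][:12], row["purchase"])
```

A keyed hash is used instead of a plain SHA-256 digest because unkeyed hashes of predictable values such as email addresses can be reversed by hashing a list of candidate inputs.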